Why Are Video Captions Essential for Success in 2025? Complete Guide


Captions are no longer optional: they are the cornerstone of a successful video strategy in 2025. AI-powered captioning now reaches 95% accuracy, and the video captioning market is projected to grow from USD 2.1 billion in 2024 to USD 6.5 billion by 2033. That growth is driven by accessibility mandates, muted autoplay, and global content localization. Instant captioning has become a standard feature of modern video editing platforms, and creators who skip subtitles risk significant audience and revenue loss in an increasingly competitive digital landscape.

This comprehensive guide reveals why captions are essential, explores cutting-edge AI-powered captioning technology, and provides actionable strategies to integrate instant captioning into your workflow for maximum engagement and compliance.

Why Are Captions Essential for Video Success in 2025?

Captions deliver measurable value through four pillars: engagement, legal compliance, search visibility, and brand consistency. Together, they create a competitive advantage that directly affects your bottom line.

The data is compelling: over 70% of viewers prefer videos with captions. The World Health Organization's World Report on Hearing estimates that 1.5 billion people worldwide live with some degree of hearing loss. For creators and brands, this translates into expanded reach, reduced legal risk, and better content performance across platforms.

How Do Captions Drive Engagement for Silent Viewers?

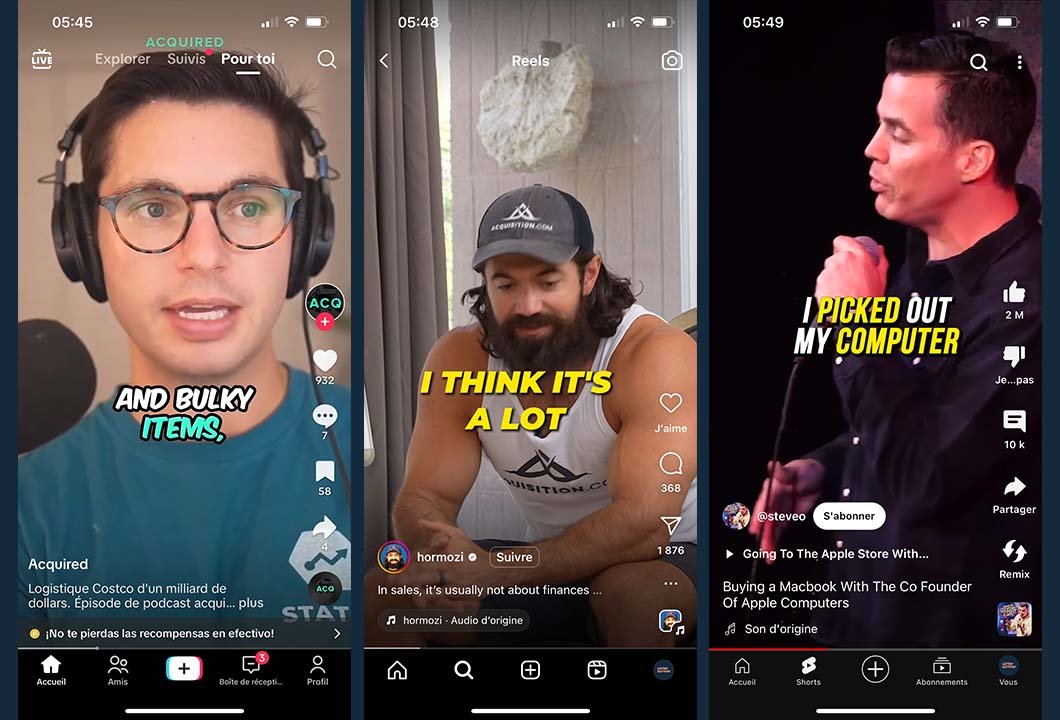

Most social media videos autoplay without sound, so captions are often the first, and sometimes the only, way viewers engage with your content. This has fundamentally changed how audiences discover and watch video in 2025.

Videos with captions see up to 30% longer average watch time than uncaptioned content. The 70% viewer preference for captions isn't only about accessibility. Captions also capture attention in sound-sensitive settings like offices, public transport, and late-night browsing.

Caption engagement benefits by viewing context:

According to Facebook's video consumption data, 85% of video on Facebook is watched without sound, making captions essential for capturing and retaining viewers.

What Are the Legal Requirements for Video Accessibility?

Accessibility means designing products that people with disabilities can use. The Americans with Disabilities Act (ADA) requires public entities and businesses open to the public to communicate effectively with people with disabilities. Courts increasingly apply that requirement to online video, which makes captions a practical legal necessity that helps protect organizations from costly lawsuits.

Non-compliance risks extend well beyond legal fees. Brands face potential lawsuit costs averaging $250,000 annually, plus reputational damage from accessibility failures.

Global accessibility compliance requirements:

Forward-thinking organizations view captions as insurance against litigation while demonstrating commitment to inclusive design and social responsibility.

How Do Captions Boost SEO Through Searchable Transcripts?

Captions create indexable text that search engines use to understand video content. That makes the content easier to discover and improves organic reach and keyword rankings.

Search algorithms can't watch videos—they rely on transcripts to categorize and surface content to relevant audiences.

Searchable transcripts can lift keyword rankings by up to 20%, according to recent SEO research from Search Engine Journal's video optimization studies. This organic boost compounds over time, creating long-term visibility advantages for captioned content across YouTube, Google, and social platforms.

SEO benefits of video captions include:

- Enhanced content indexing with search engines reading caption text as part of page content

- Long-tail keyword opportunities from natural speech patterns captured in video transcripts

- Improved dwell time signals through longer engagement that indicates content quality to algorithms

- Featured snippet potential from transcribed content appearing in Google's answer boxes

- Cross-platform discoverability improving visibility across YouTube, Google Video, and social media searches
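One common way to expose caption text to search engines is schema.org `VideoObject` structured data, which defines a `transcript` property. The sketch below assumes you already have the caption text as a single string. The function name `video_jsonld` and all example values are hypothetical, and each search engine decides for itself how, or whether, to use the markup.

```python
import json


def video_jsonld(name: str, description: str, content_url: str,
                 thumbnail_url: str, upload_date: str, transcript: str) -> str:
    """Build schema.org VideoObject JSON-LD that carries the caption transcript."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "contentUrl": content_url,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,
        # Plain-text transcript assembled from the captions, so crawlers can read it.
        "transcript": transcript,
    }
    return json.dumps(data, indent=2)


# Hypothetical example values for illustration only.
markup = video_jsonld(
    name="Why captions matter",
    description="A short explainer on video captions.",
    content_url="https://example.com/videos/captions.mp4",
    thumbnail_url="https://example.com/videos/captions.jpg",
    upload_date="2025-01-15",
    transcript="Most social videos autoplay without sound, so captions carry the message.",
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Embedding the JSON-LD in the page that hosts the video keeps the transcript next to the player. It also keeps the transcript invisible to viewers who don't need it.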

Why Is Brand Consistency Crucial Across Video Platforms?

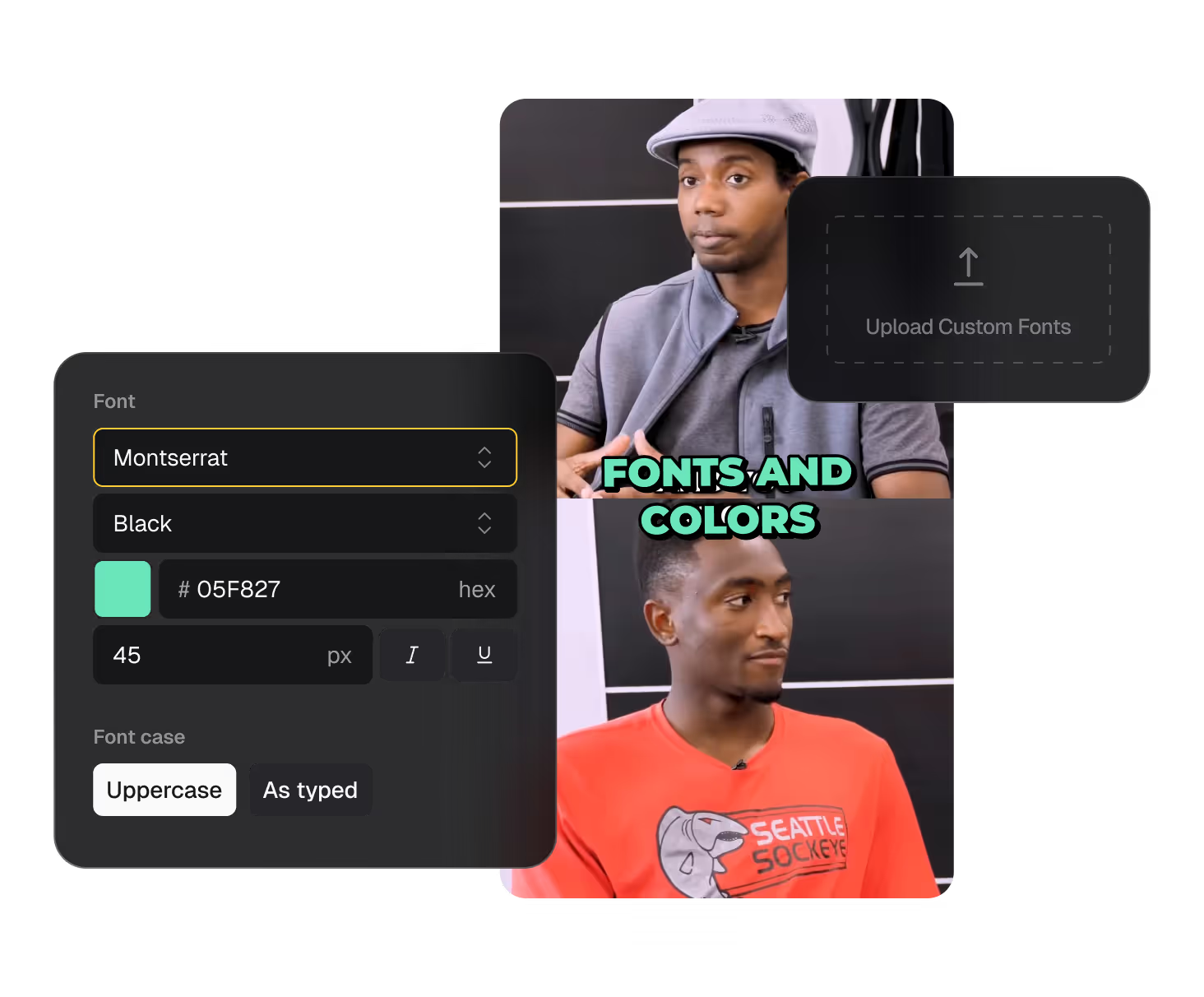

Uniform caption styling reinforces visual identity across YouTube, Instagram Reels, TikTok, and LinkedIn. Consistent fonts, colors, and positioning create recognizable brand touchpoints that strengthen audience connection and professional credibility.

OpusClip's adaptive styling feature maintains brand colors and fonts automatically, ensuring visual consistency without manual formatting across diverse platform requirements.

This systematic approach saves time while building stronger brand recognition and professional credibility across different social media environments.

How Is AI-Powered Captioning Technology Shaping the Future?

AI captioning turns speech-to-text processing into real-time, multilingual subtitle generation using natural language processing and machine learning models. OpusClip's proprietary ClipAnything technology is at the forefront of this shift, delivering professional captions instantly.

Modern AI systems process audio with low latency, generating synchronized captions whose contextual accuracy approaches that of human transcription. This leap makes professional captioning accessible to creators at every level, removing the traditional barriers of time, cost, and technical expertise.

What Advances in Speech-to-Text Accuracy Are Driving Adoption?

Speech-to-text technology automatically transcribes spoken audio into written text using natural language processing and machine learning algorithms, with recent breakthroughs dramatically improving transcription quality and processing speed.

Word error rates have dropped below 5% in clean audio environments, with leading systems like OpusClip achieving 95% accuracy even with accents, technical terminology, and varying speech patterns. This accuracy threshold makes AI captioning viable for professional, educational, and commercial applications.
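Word error rate (WER) is the standard way to measure transcription quality. It is the number of substitutions, deletions, and insertions needed to turn the AI transcript into a reference transcript, divided by the number of reference words. "95% accuracy" is commonly used as shorthand for a WER of about 5%. The sketch below computes WER with a word-level edit distance. The function name and the sample sentences are illustrative assumptions.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words processed so far
    # and the first j hypothesis words (Levenshtein DP, one row at a time).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


reference = "captions help viewers follow muted videos"
hypothesis = "captions help viewer follow muted video"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}  (word accuracy ~ {1 - wer:.1%})")
```

Insertions can push WER above 100%, so "accuracy = 1 - WER" is a convenient simplification rather than a strict percentage.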

AI captioning accuracy improvements over time:

According to industry research from Grand View Research's Speech Recognition Market Report, the global speech recognition market is projected to reach $26.8 billion by 2025, driven primarily by accuracy improvements and cost reductions in AI processing.

How Does Real-Time Multilingual Captioning Work?

AI systems now generate captions in multiple languages simultaneously. This enables global distribution without manual translation workflows and changes how creators reach international audiences.

Multilingual support has become standard in 2025, with leading platforms like OpusClip offering 30+ languages and real-time translation. Creators can produce content once and distribute it globally with localized captions, expanding both reach and engagement potential.

Real-time translation process:

- Audio Analysis: AI processes original spoken language with context understanding

- Transcription Generation: Creates accurate captions in source language

- Translation Processing: Converts captions to target languages while preserving meaning

- Cultural Localization: Adapts expressions and references for local audiences

- Synchronization Maintenance: Ensures timing accuracy across all language versions
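The "Synchronization Maintenance" step usually comes down to translating each caption cue's text while leaving its start and end times untouched. Every language version then shares the same timing. The sketch below assumes cues are already transcribed. The `Cue` type is hypothetical, and `demo_translate` is a stand-in for whatever machine-translation service you actually call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


def translate_cues(cues: list[Cue], translate: Callable[[str], str]) -> list[Cue]:
    """Translate each cue's text while keeping its original timing."""
    return [Cue(c.start, c.end, translate(c.text)) for c in cues]


# Hypothetical stand-in for a real machine-translation call.
GLOSSARY_ES = {"Welcome back": "Bienvenidos de nuevo", "Let's get started": "Empecemos"}


def demo_translate(text: str) -> str:
    return GLOSSARY_ES.get(text, text)


source = [Cue(0.0, 1.8, "Welcome back"), Cue(1.8, 3.5, "Let's get started")]
for cue in translate_cues(source, demo_translate):
    print(f"{cue.start:5.1f}-{cue.end:5.1f}  {cue.text}")
```

Translated text is often longer than the source, so it is worth re-checking reading speed on each language version before publishing.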

How Do AI Systems Handle Complex Audio Challenges?

Complex audio environments with overlapping dialogue and ambient sound challenge traditional transcription methods, but modern AI models use speaker diarization and noise-suppression algorithms to maintain over 90% accuracy in challenging conditions.

Speaker diarization identifies individual voices in multi-person conversations, while noise suppression filters background interference. These technologies enable accurate captioning for interviews, panel discussions, and real-world recording scenarios previously requiring expensive manual transcription.

Advanced AI audio processing capabilities:

- Multi-speaker identification with automatic voice separation and labeling

- Background noise filtering maintaining accuracy in challenging acoustic environments

- Accent recognition across regional and international speech patterns

- Technical terminology handling through specialized vocabulary databases

- Emotional tone detection for context-appropriate captioning and emphasis
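Diarization ("who spoke when") and transcription ("what was said") are typically produced separately, so their outputs must be aligned before speaker labels can appear in captions. The sketch below uses one simple approach. Each word goes to the diarization turn that overlaps it most, and runs of the same speaker are merged into labeled lines. This is an assumption for illustration, not necessarily what any particular product does. The `Turn` and `Word` inputs are hypothetical outputs of a diarization model and a speech-to-text model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Turn:
    """One diarization turn: who spoke, and when (seconds)."""
    speaker: str
    start: float
    end: float


@dataclass(frozen=True)
class Word:
    """One recognized word with its timestamps (seconds)."""
    text: str
    start: float
    end: float


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the time shared by [a_start, a_end] and [b_start, b_end]."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_words(words: list[Word], turns: list[Turn]) -> list[tuple[str, str]]:
    """Assign each word to the speaker whose turn overlaps it the most."""
    labeled = []
    for w in words:
        best_speaker, best_overlap = "UNKNOWN", 0.0
        for t in turns:
            o = overlap(w.start, w.end, t.start, t.end)
            if o > best_overlap:
                best_speaker, best_overlap = t.speaker, o
        labeled.append((best_speaker, w.text))
    return labeled


def speaker_lines(labeled: list[tuple[str, str]]) -> list[str]:
    """Merge consecutive words from the same speaker into one labeled caption line."""
    lines: list[list[str]] = []
    for speaker, text in labeled:
        if lines and lines[-1][0] == speaker:
            lines[-1][1] += " " + text
        else:
            lines.append([speaker, text])
    return [f"[{speaker}] {text}" for speaker, text in lines]


turns = [Turn("Host", 0.0, 2.0), Turn("Guest", 2.0, 4.5)]
words = [Word("Thanks", 0.1, 0.5), Word("for", 0.5, 0.7), Word("joining", 0.7, 1.2),
         Word("Happy", 2.1, 2.5), Word("to", 2.5, 2.6), Word("be", 2.6, 2.8),
         Word("here", 2.8, 3.2)]
for line in speaker_lines(label_words(words, turns)):
    print(line)
```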

How Do Modern Platforms Integrate with Video Editing Suites?

Top editing platforms, including Adobe Premiere Pro, Final Cut Pro, and DaVinci Resolve, now support direct caption integration through APIs and plugins. OpusClip offers one-click caption import for these major editing suites, a more streamlined path than traditional workflows.

This integration eliminates file conversion steps and formatting issues, allowing editors to focus on creative decisions rather than technical caption management. The result is faster turnaround times and consistent quality across production pipelines.

What Caption Trends Should You Watch in 2025?

Future-focused caption trends center on automation, personalization, and interactive functionality, with each trend addressing specific creator needs while improving viewer experience and engagement metrics.

These innovations represent the next evolution of video captioning, moving beyond basic transcription toward intelligent, adaptive subtitle systems that enhance content value and viewer interaction.

How Will Adaptive Styling Transform Platform-Specific Formats?

Captions will automatically adjust font size, placement, and background based on target platform aspect ratios including 9:16 vertical, 1:1 square, and 16:9 horizontal formats, with this automation eliminating manual reformatting across different social media requirements.

Platform-specific optimization ensures captions remain readable and aesthetically appropriate whether content appears on TikTok's mobile interface or YouTube's desktop player. Adaptive styling maintains professional appearance while maximizing cross-platform efficiency.
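Adaptive styling can be thought of as a lookup. Detect the frame's aspect ratio, pick the closest preset, and scale font size and margins to the frame. The sketch below shows that idea. The preset numbers, the `CaptionStyle` type, and the "raise captions above app controls on vertical video" rule are hypothetical values chosen for illustration. Real platform guidelines and brand templates would replace them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaptionStyle:
    font_px: int
    max_chars_per_line: int
    bottom_margin_px: int


# Hypothetical presets: (font size as a fraction of the frame's shorter side,
# max characters per line, bottom margin as a fraction of frame height).
PRESETS = {
    "9:16": (0.060, 24, 0.22),  # vertical: larger text, raised above app controls
    "1:1": (0.055, 32, 0.10),
    "16:9": (0.050, 42, 0.08),
}


def _ratio(label: str) -> float:
    w, h = label.split(":")
    return int(w) / int(h)


def style_for(width: int, height: int) -> CaptionStyle:
    """Choose the preset whose aspect ratio is closest to the frame's, then scale it."""
    ratio = width / height
    label = min(PRESETS, key=lambda k: abs(ratio - _ratio(k)))
    font_frac, max_chars, margin_frac = PRESETS[label]
    return CaptionStyle(
        font_px=round(min(width, height) * font_frac),
        max_chars_per_line=max_chars,
        bottom_margin_px=round(height * margin_frac),
    )


for w, h in [(1080, 1920), (1080, 1080), (1920, 1080)]:
    print(f"{w}x{h}: {style_for(w, h)}")
```

Scaling from the shorter side keeps text size roughly comparable across orientations, while the per-preset line length reflects how much horizontal room each format offers.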

Platform format optimization matrix:

What Interactive and Clickable Caption Features Are Emerging?

Interactive captions feature clickable subtitle lines that link to URLs, product pages, or video timestamps, transforming passive viewing into active engagement and creating new monetization and educational opportunities.

Shoppable videos use interactive captions to link product mentions directly to purchase pages, while educational content enables "jump-to-section" navigation. These features increase viewer engagement time and create measurable conversion pathways from video content.

Interactive caption use cases:

- E-commerce integration linking product mentions to shopping pages

- Educational navigation allowing viewers to jump to specific topics or sections

- Call-to-action enhancement making verbal CTAs clickable and trackable

- Social media cross-promotion linking to related content or profiles

- Lead generation capturing viewer information through caption interactions
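Two core operations sit under the use cases above. The first finds which caption is on screen when a viewer clicks, so its link can be followed. The second finds where a topic starts, for jump-to-section navigation. The sketch below assumes cues are sorted and non-overlapping. The `LinkedCue` type and its `href` field are hypothetical, since caption formats and players handle links differently.

```python
import bisect
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LinkedCue:
    start: float                # seconds
    end: float                  # seconds
    text: str
    href: Optional[str] = None  # hypothetical link: product page, CTA, or profile


def cue_at(cues: list[LinkedCue], t: float) -> Optional[LinkedCue]:
    """Return the cue on screen at time t; cues must be sorted and non-overlapping."""
    starts = [c.start for c in cues]
    i = bisect.bisect_right(starts, t) - 1  # last cue starting at or before t
    if i >= 0 and t < cues[i].end:
        return cues[i]
    return None


def jump_target(cues: list[LinkedCue], keyword: str) -> Optional[float]:
    """Start time of the first cue mentioning keyword, for jump-to-section navigation."""
    for c in cues:
        if keyword.lower() in c.text.lower():
            return c.start
    return None


cues = [
    LinkedCue(0.0, 3.0, "Today we test the new trail shoe", "https://example.com/trail-shoe"),
    LinkedCue(3.0, 6.0, "First, the fit"),
    LinkedCue(6.0, 9.0, "Next, grip on wet rock"),
]
clicked = cue_at(cues, 1.2)
print(clicked.href if clicked else None)  # link behind the caption clicked at 1.2 s
print(jump_target(cues, "grip"))          # where "jump to grip" should seek
```

A production player would precompute the `starts` list once rather than on every click. The binary search via `bisect` is what keeps lookups fast on long videos.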

How Is Auto-Translation Democratizing Global Content?

AI systems automatically translate captions into 30+ languages, reducing manual localization costs while maintaining contextual accuracy and democratizing global content distribution for creators without translation budgets.

Context-aware processing helps automated translation preserve cultural nuances and technical terminology. Creators can enter international markets immediately and test audience response before investing in professional translation services.

Global expansion through auto-translation:

- Market testing in new regions without upfront localization investment

- Audience development building international communities through accessible content

- Revenue diversification monetizing content across multiple language markets

- Cultural adaptation maintaining authenticity while expanding global reach

- Competitive advantage entering markets ahead of non-multilingual competitors

What Data-Driven Optimization Will Shape Caption Performance?

Analytics including click-through rates and watch-time lift feed back into AI models to suggest optimal caption length, timing, and styling, with this data-driven approach continuously improving caption performance based on audience behavior patterns.

Machine learning algorithms identify patterns between caption characteristics and engagement metrics, automatically optimizing future captions for maximum viewer retention and interaction. This creates a self-improving system that enhances content performance over time.

Data-driven caption optimization metrics:

- Optimal caption length based on platform and audience attention spans

- Timing precision for maximum readability and comprehension

- Style effectiveness measuring font, color, and placement performance

- Language preferences identifying best-performing multilingual options

- Engagement correlation connecting caption quality to viewer actions
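Before caption characteristics can be correlated with engagement, they have to be measured per cue. The most common measurements are duration, characters per line, and reading speed in characters per second. The sketch below flags cues that break simple readability limits. The thresholds are assumptions for illustration, since style guides and platforms set different values. In a data-driven setup these measurements would be logged next to watch-time and click-through data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str     # may contain "\n" for a two-line caption


# Assumed thresholds for illustration only; style guides and platforms differ.
MAX_CHARS_PER_SECOND = 17.0
MAX_LINE_CHARS = 42
MIN_DURATION_S = 1.0


def caption_issues(cue: Cue) -> list[str]:
    """Return human-readable readability problems for one caption cue."""
    issues = []
    duration = cue.end - cue.start
    chars = len(cue.text.replace("\n", ""))
    if duration < MIN_DURATION_S:
        issues.append(f"too short ({duration:.2f} s)")
    if duration > 0 and chars / duration > MAX_CHARS_PER_SECOND:
        issues.append(f"reading speed {chars / duration:.1f} chars/s")
    for line in cue.text.split("\n"):
        if len(line) > MAX_LINE_CHARS:
            issues.append(f"line too long ({len(line)} chars)")
    return issues


cues = [
    Cue(0.0, 2.5, "Captions keep viewers watching"),
    Cue(2.5, 3.5, "even when the sound is off on a crowded train"),
]
for c in cues:
    print(f"{c.start:4.1f}s  {caption_issues(c) or 'ok'}")
```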

How Do You Integrate Instant Captioning into Your Workflow?

Effective caption integration requires systematic workflow design that maximizes automation while maintaining quality control, with the following step-by-step approach ensuring consistent results and efficient production processes.

OpusClip's streamlined interface enables professional captioning without technical expertise, making advanced features accessible to creators at every skill level while delivering superior results compared to traditional captioning solutions.

What Is the Process for One-Click Caption Generation?

Upload your video file, select "Generate Captions," and click "Create" to start automatic transcription through OpusClip's AI processing. The platform typically processes a 10-minute video in under 2 minutes and achieves up to 95% accuracy on clear audio.

The system automatically detects speech patterns, applies punctuation, and synchronizes timing without manual input. Users can preview results immediately and make adjustments before final export, ensuring quality control throughout the process.

OpusClip's automated captioning workflow:

- Video Upload: Drag-and-drop interface accepts all major video formats

- AI Processing: ClipAnything technology analyzes audio with context understanding

- Caption Generation: Creates time-synchronized subtitles with proper formatting

- Quality Preview: Real-time preview shows captions overlaid on video

- Style Application: Brand templates ensure consistent visual identity

- Format Export: Generate platform-specific versions for all social media channels
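The "Caption Generation" step usually starts from word-level timestamps produced by speech recognition. Those words have to be packed into readable cues. The sketch below uses a simple greedy rule: start a new cue when adding the next word would exceed a character limit, or when there is a noticeable pause. The `Word`/`Cue` types and the default limits (`max_chars=42`, `max_gap=0.6` seconds) are hypothetical choices. It is not a description of how OpusClip segments captions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


@dataclass(frozen=True)
class Cue:
    start: float
    end: float
    text: str


def _to_cue(words: list[Word]) -> Cue:
    return Cue(words[0].start, words[-1].end, " ".join(w.text for w in words))


def group_words(words: list[Word], max_chars: int = 42, max_gap: float = 0.6) -> list[Cue]:
    """Greedily pack timed words into cues, breaking on length or long pauses."""
    cues: list[Cue] = []
    current: list[Word] = []
    for w in words:
        if current:
            candidate = " ".join(x.text for x in current + [w])
            pause = w.start - current[-1].end
            if len(candidate) > max_chars or pause > max_gap:
                cues.append(_to_cue(current))
                current = []
        current.append(w)
    if current:
        cues.append(_to_cue(current))
    return cues


words = [Word("Captions", 0.0, 0.4), Word("matter", 0.4, 0.8), Word("more", 0.8, 1.1),
         Word("than", 1.1, 1.3), Word("ever.", 1.3, 1.7),
         Word("Here's", 2.6, 2.9), Word("why.", 2.9, 3.2)]
for cue in group_words(words):
    print(f"{cue.start:.1f}-{cue.end:.1f}  {cue.text}")
```

Real segmenters often also prefer to break at sentence punctuation and balance two-line captions. The greedy rule is just the simplest version that respects both length and timing.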

How Do You Sync Captions with Video Editing and AI-Generated Content?

OpusClip's ClipAnything feature automatically cuts video highlights while preserving synchronized captions, maintaining timing accuracy across edited segments and eliminating the need for separate captioning workflows after content editing.

AI-generated B-roll refers to supplemental footage created from text prompts to enrich primary video content. The platform synchronizes these generated elements with existing captions, creating cohesive final products that maintain professional quality standards.

Integrated editing and captioning benefits:

- Time synchronization maintained across all video cuts and edits

- B-roll alignment ensuring captions match both primary and supplemental content

- Quality consistency preserving caption accuracy throughout editing process

- Workflow efficiency eliminating need for multiple software platforms

- Professional results matching broadcast television production standards
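Keeping captions in sync after cutting highlights is essentially a timeline remap. Each kept source range is placed back-to-back in the output. Every cue that overlaps a kept range is clipped to it and shifted by the output time at which that range begins. The sketch below assumes the edit is described as a list of `(start, end)` source ranges in playback order. The `Cue` type and `retime` function are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


def retime(cues: list[Cue], keep: list[tuple[float, float]]) -> list[Cue]:
    """Map cues from the source timeline onto an edit built from kept source ranges."""
    out: list[Cue] = []
    offset = 0.0  # where the current kept range begins in the output timeline
    for seg_start, seg_end in keep:
        for c in cues:
            s, e = max(c.start, seg_start), min(c.end, seg_end)
            if e > s:  # the cue overlaps this kept range: clip it, then shift it
                out.append(Cue(s - seg_start + offset, e - seg_start + offset, c.text))
        offset += seg_end - seg_start
    return out


source = [Cue(0.0, 4.0, "Intro"), Cue(10.0, 14.0, "Key point"), Cue(14.0, 18.0, "Wrap-up")]
for cue in retime(source, keep=[(9.0, 15.0)]):
    print(f"{cue.start:.1f}-{cue.end:.1f}  {cue.text}")
```

Clipping can leave a cue on screen for only a fraction of a second. A real pipeline would typically drop or extend such fragments after retiming.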

What Collaboration Features Support Team-Based Production?

Built-in team workspaces allow multiple users to review, suggest edits, and approve caption versions within a centralized platform, streamlining quality assurance while maintaining clear approval workflows.

Version control tracks all caption changes, enabling teams to revert to previous versions or compare different approaches. Comment systems facilitate feedback exchange, ensuring all stakeholders can contribute to caption accuracy and style decisions.

Team collaboration capabilities:

- Role-based permissions controlling who can edit, review, or approve captions

- Comment and feedback systems enabling collaborative quality improvement

- Version history tracking maintaining audit trails and change documentation

- Approval workflows ensuring content meets brand and quality standards

- Real-time collaboration allowing simultaneous work on caption projects

What Export Options Optimize Multi-Platform Distribution?

Export options include SRT, WebVTT (VTT), and SCC caption files, plus burned-in subtitles optimized for Instagram, YouTube, and TikTok through OpusClip's format support. Platform-specific presets eliminate manual formatting and ensure compatibility across distribution channels.

Recommended approach: use platform-specific presets to avoid formatting issues and maintain consistent appearance. Each preset optimizes font size, positioning, and styling for maximum readability on target platforms.

Platform export optimization:

How Do You Measure the Business Impact of Video Captions?

Caption ROI manifests through engagement improvements, SEO benefits, compliance cost savings, and revenue growth, with systematic measurement enabling data-driven optimization and demonstrating captioning value to stakeholders.

Each metric connects directly to business outcomes, creating clear justification for caption investment and ongoing optimization efforts.

What Engagement Metrics Show Caption Effectiveness?

Captions increase average watch time by 12% and click-through rates by 8% across major platforms, with these improvements compounding over time to create sustained performance advantages for captioned content.

Share rates also improve, with captioned videos receiving 15% more social shares than uncaptioned equivalents. This additional sharing amplifies organic reach and reduces the need for paid promotion.
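Percentages like these are relative lifts: the captioned result minus the uncaptioned baseline, divided by the baseline. The sketch below shows the arithmetic with hypothetical A/B numbers for average watch time. For the comparison to mean anything, both variants should be shown to comparable audiences over the same period.

```python
def relative_lift(treated: float, baseline: float) -> float:
    """Relative lift of the captioned variant over the uncaptioned baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (treated - baseline) / baseline


# Hypothetical A/B numbers: average watch time in seconds for each variant.
captioned_watch, uncaptioned_watch = 44.8, 40.0
print(f"Watch-time lift: {relative_lift(captioned_watch, uncaptioned_watch):.0%}")
```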

Caption engagement impact measurements:

How Do SEO Metrics Demonstrate Caption Value?

Searchable transcripts boost organic video traffic by 25% within three months of implementation, with search engines indexing caption text to improve content discoverability for relevant keyword searches.

Keyword ranking improvements appear across both video platforms and traditional search results, creating multiple discovery pathways for the same content. This diversified visibility reduces dependence on paid advertising while building long-term organic traffic.

SEO performance indicators:

- Organic traffic growth of 20-35% within 90 days of caption implementation

- Keyword ranking improvements across long-tail and primary keyword targets

- Featured snippet appearances from transcribed content in search results

- Video search visibility enhanced across Google Video and YouTube search

- Cross-platform discoverability improving content reach across all search engines

What Compliance ROI and Risk Mitigation Benefits Exist?

Proactive captioning helps avoid ADA-related legal costs averaging $250,000 annually while demonstrating a commitment to accessibility and inclusive design.

The cost of compliance is small compared with litigation expenses and the reputational damage of accessibility failures.

Beyond risk mitigation, accessibility compliance opens new market opportunities with disability-focused organizations and government contracts requiring ADA compliance. This expanded market access creates positive ROI beyond defensive legal positioning.

Compliance value calculations:

- Legal risk reduction: Avoiding $55,000-$250,000 potential fines per violation

- Market expansion: Access to 61 million Americans with disabilities (19% of population)

- Contract eligibility: Government and institutional contracts requiring accessibility compliance

- Brand reputation protection: Positive public perception through inclusive practices

- Insurance benefits: Potential reductions in liability insurance costs

What Real-World Results Demonstrate Caption Impact?

E-learning Platform Case Study: After implementing OpusClip's instant captions across course content, completion rates increased 30% and student satisfaction scores improved 25%. International enrollment grew 40% due to multilingual caption availability.

Retail Brand Case Study: Multilingual captions generated through OpusClip on product demonstration videos increased international sales by 15% within six months. The brand expanded into three new markets using existing video content with localized captions, reducing market entry costs by 60%.

Agency Performance Case Study: Marketing agencies using OpusClip reported 148% revenue growth through improved client results and expanded service offerings enabled by efficient captioning workflows.

Conclusion

Captions sit at the intersection of accessibility, engagement, and business growth in 2025's video landscape. OpusClip's AI-powered instant captioning achieves up to 95% accuracy and processes a 10-minute video in under two minutes, removing the main technical barriers to professional captioning.

The question isn't whether to add captions—it's how quickly you can implement them across your entire content strategy.

Key implementation priorities for 2025:

- Start immediately with OpusClip's one-click generation to establish baseline caption coverage

- Measure engagement improvements through platform analytics and performance tracking

- Scale systematically across your entire content library using batch processing features

- Optimize continuously based on data-driven insights and audience feedback

- Expand globally through multilingual caption support and international market testing

Organizations that embrace OpusClip's comprehensive captioning solutions now will build competitive advantages in accessibility, SEO performance, and global reach that compound over time.

Don't wait for competitors to discover this opportunity—make captions standard practice today with OpusClip's advanced AI technology and capture the full potential of your video content strategy.

Start your free trial with OpusClip today and transform every video into an accessible, engaging, and globally-ready content asset that drives measurable business results.

Frequently Asked Questions About Video Caption Trends 2025

How accurate is AI-generated captioning in 2025?

AI-generated captioning achieves up to 95% accuracy on clear audio and maintains over 90% accuracy with background noise or overlapping speakers. OpusClip's speech-to-text models handle accents, technical terminology, and varying speech patterns, with word error rates below 5% in clean audio environments. That approaches the quality of professional human transcription.

Can captions be customized to match brand guidelines?

Yes, OpusClip provides complete caption customization including font, color, size, and placement to match your brand guidelines. You can save brand-specific styles as reusable templates and apply adaptive styling that automatically adjusts for different platform formats (9:16, 1:1, 16:9) while maintaining consistent brand identity.

What languages does instant captioning support in 2025?

OpusClip supports 30+ languages including English, Spanish, Mandarin, French, German, and Arabic with real-time multilingual captioning capabilities. Auto-translation is available for an additional 20 languages, enabling global content reach without manual localization costs or lengthy turnaround times.

How do captions ensure legal compliance with accessibility standards?

Use accurate, synchronized captions that meet ADA accessibility requirements. Before export, OpusClip's quality-check system automatically flags potential issues such as timing errors, missing punctuation, and readability problems. This helps reduce lawsuit risk and supports the caption requirements of WCAG 2.1 Level AA.

Are there limits on video length or file size for AI captioning?

OpusClip handles videos up to 4 hours long and up to 10 GB in file size with processing times under 2 minutes for 10-minute videos. For larger files, the built-in editing tools can split content into manageable segments while maintaining caption synchronization and quality across all segments.

Can captions be edited after AI generation?

Yes, OpusClip provides an editable timeline interface where you can correct text, adjust timing, and apply styling before export. The editor includes spell-check, timing adjustment tools, and real-time preview with team collaboration features for review and approval workflows.

How do captions impact video engagement and SEO performance?

Captions can increase average watch time by up to 30% and click-through rates by 8%, and 70% of users say they prefer videos with captions. Searchable transcripts can lift keyword rankings by up to 20% and improve organic reach by making video content indexable by search engines, creating multiple discovery pathways for the same content.

What export formats are available for different platforms?

OpusClip exports captions as SRT, WebVTT (VTT), and SCC files, plus burned-in subtitles optimized for Instagram, YouTube, and TikTok. Platform-specific presets automatically format captions to each platform's requirements without manual reformatting, ensuring optimal display across all distribution channels.