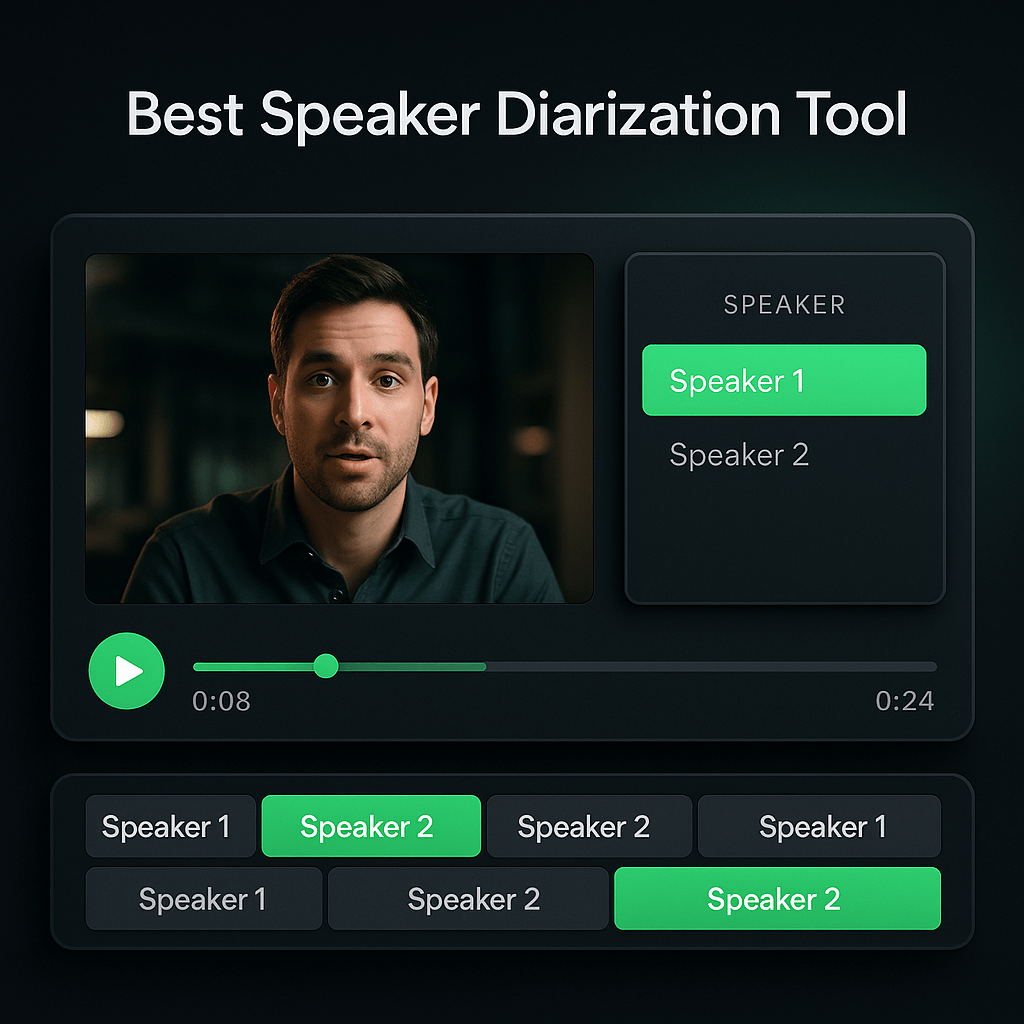

12 Best Speaker Diarization Tools for Multi-Speaker Video

I've spent countless hours editing interviews, podcasts, and panel discussions, and I can tell you that manually tracking who's speaking when is one of the most tedious tasks in video production. Speaker diarization technology has completely changed this workflow by automatically identifying and labeling different speakers in your recordings. Whether you're producing a two-person interview or a ten-person roundtable, the right diarization tool can save you hours of work and dramatically improve your content's accessibility and searchability.

In this guide, I'll walk you through the twelve best speaker diarization tools available today, covering everything from enterprise-grade platforms to creator-friendly solutions. You'll learn what features matter most, how accuracy varies across different tools, and which option fits your specific workflow and budget. By the end, you'll know exactly which tool can transform your multi-speaker content from a transcription nightmare into a streamlined production asset.

Key Takeaways

- Speaker diarization automatically identifies and labels different speakers in your videos, saving hours of manual work and improving transcript usability for interviews, podcasts, and panel discussions.

- Accuracy, integration capabilities, and language support are the three most important factors when choosing a diarization tool, with accuracy typically ranging from 80% to 95% depending on audio quality and speaker similarity.

- Enterprise solutions like Rev AI and AssemblyAI offer the highest accuracy and scalability, while creator-focused platforms like Descript and OpusClip provide integrated workflows that combine diarization with editing and clip creation.

- Open-source tools like Pyannote.audio provide free, customizable diarization for technical users, offering the best cost-to-performance ratio when you have development resources available.

- Proper audio preparation, including noise reduction and separate microphones per speaker, dramatically improves diarization accuracy and reduces the time needed for manual corrections.

- Building speaker libraries for recurring guests enables automatic name labeling over time, making diarization increasingly efficient as your content library grows.

What Is Speaker Diarization and Why Does It Matter?

Speaker diarization is the process of partitioning an audio or video stream into segments according to speaker identity. In simpler terms, it answers the question "who spoke when?" by automatically detecting when different speakers talk and labeling those segments accordingly. This technology uses acoustic features like pitch, tone, and speaking patterns to distinguish between voices, although overlapping speech and interruptions remain harder to label correctly than clean turn-taking.

For content creators and marketers, speaker diarization solves several critical problems. First, it makes transcripts far more useful by attributing quotes to specific speakers, which is essential for interviews, testimonials, and educational content. Second, it enables precise editing workflows where you can quickly locate and extract specific speaker segments. Third, it improves accessibility by creating more accurate captions that indicate speaker changes, helping viewers follow complex conversations.

The technology has advanced dramatically in recent years thanks to machine learning improvements. Modern diarization systems can handle challenging scenarios like background noise, multiple simultaneous speakers, and varying audio quality. When you're repurposing long-form content into clips or creating searchable video libraries, speaker diarization becomes the foundation that makes everything else possible. Tools like OpusClip leverage this technology to help you identify the most engaging moments from each speaker and automatically create clips with properly attributed captions.

Key Features to Look for in Speaker Diarization Tools

Not all speaker diarization tools are created equal, and understanding the key differentiators will help you choose the right solution. The most important feature is accuracy, specifically how well the tool distinguishes between similar-sounding voices and handles speaker overlaps. Look for tools that specify their word error rate (WER) and diarization error rate (DER), with lower percentages indicating better performance.
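To make the DER figure concrete, here is a minimal sketch of how it is usually computed: the time lost to missed speech, false alarms, and speaker confusion, divided by the total reference speech time. The function name and the sample numbers are illustrative assumptions, not measurements from any tool in this guide.

```python
def diarization_error_rate(missed, false_alarm, confusion, total_speech):
    """Return DER as a fraction of total reference speech time.

    All arguments are durations in seconds:
      missed       - speech the tool failed to detect
      false_alarm  - non-speech the tool labeled as speech
      confusion    - speech attributed to the wrong speaker
      total_speech - total speech time in the reference annotation
    """
    if total_speech <= 0:
        raise ValueError("total_speech must be positive")
    return (missed + false_alarm + confusion) / total_speech


# Hypothetical recording with 540 seconds of actual speech.
der = diarization_error_rate(
    missed=12.0, false_alarm=9.0, confusion=33.0, total_speech=540.0
)
print(f"DER: {der:.1%}")  # prints DER: 10.0%
```

When a vendor quotes a single DER number, it is worth asking which of the three error types dominates, since speaker confusion is usually the one that costs you the most correction time.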

Integration capabilities matter tremendously for workflow efficiency. The best tools connect seamlessly with your existing video editing software, content management systems, or social media platforms. Some offer API access for custom integrations, while others provide native plugins for popular platforms like Adobe Premiere or Final Cut Pro. Consider whether you need real-time diarization for live events or if batch processing of recorded content is sufficient for your needs.

Speaker Identification vs. Speaker Recognition

It's important to understand the difference between speaker identification and speaker recognition. In this guide, speaker identification means the anonymous labeling that most diarization tools do: they mark speakers as "Speaker 1," "Speaker 2," and so on, distinguishing between different voices without knowing who they are. Speaker recognition goes further by matching voices to known speaker profiles, automatically labeling segments as "John Smith" or "Jane Doe." Be aware that some vendors use "speaker identification" for this name-matching step as well, so check how each tool defines the term. Some advanced tools offer both capabilities, allowing you to build speaker libraries for recurring guests or team members. This feature is particularly valuable for podcast series, interview shows, or corporate training content where the same speakers appear regularly.

Language and Accent Support

Language support varies significantly across diarization tools. While most handle English well, accuracy can drop substantially with other languages or strong accents. If you create multilingual content or work with diverse speakers, verify that your chosen tool supports the specific languages and dialects you need. Some tools use language-specific acoustic models that dramatically improve accuracy, while others apply a one-size-fits-all approach that may struggle with non-standard speech patterns. Additionally, consider whether the tool can handle code-switching, where speakers alternate between languages within the same conversation.

The 12 Best Speaker Diarization Tools Compared

After testing dozens of solutions and analyzing user feedback from thousands of creators, I've identified the twelve tools that consistently deliver the best results for multi-speaker video content. Each tool has distinct strengths, and the right choice depends on your specific use case, budget, and technical requirements. I've organized these tools by their primary use cases to help you quickly identify which ones deserve your closer attention.

Enterprise-Grade Solutions

Rev AI stands out as one of the most accurate commercial diarization services available, with a diarization error rate consistently below 10% in controlled conditions. The platform offers both asynchronous API access and a web interface, making it suitable for developers and non-technical users alike. Rev AI supports over 30 languages and provides speaker identification for up to 10 speakers per file. Pricing starts at $0.04 per minute for transcription with diarization, which is competitive for the accuracy level delivered. The main limitation is that it requires uploading your content to Rev's servers, which may not work for sensitive or confidential recordings.

AssemblyAI has gained significant traction among developers for its comprehensive speech-to-text API that includes advanced diarization capabilities. What sets AssemblyAI apart is its speaker labels feature that can identify an unlimited number of speakers, making it ideal for large panel discussions or conference recordings. The API returns timestamps for each speaker segment, making it easy to integrate with video editing workflows. Pricing is usage-based at $0.00025 per second ($0.015 per minute), with volume discounts available. The platform also offers additional features like sentiment analysis and content moderation that can be applied per speaker, adding valuable context to your transcripts.

AWS Transcribe is Amazon's cloud-based speech recognition service that includes speaker diarization as part of its feature set. It can identify between 2 and 10 speakers and integrates seamlessly with other AWS services, making it powerful for organizations already using Amazon's cloud infrastructure. The accuracy is solid, though not quite at Rev AI's level, and it supports over 30 languages. Pricing is $0.024 per minute for standard transcription with diarization, with additional charges for custom vocabulary and real-time processing. The learning curve is steeper than standalone tools, but the scalability and integration options make it worthwhile for technical teams handling large volumes of content.

Creator-Focused Platforms

OpusClip combines speaker diarization with AI-powered clip creation, making it exceptionally valuable for repurposing long-form content. The platform automatically identifies different speakers in your videos and uses that information to find the most engaging moments from each person. When you're creating clips from interviews or panel discussions, OpusClip ensures that speaker transitions are clean and that captions accurately reflect who's talking. This is particularly useful for social media content where properly attributed quotes build credibility. The AI understands context across speaker changes, so it can create clips that include relevant setup from one speaker and the punchline from another, maintaining narrative flow.

Descript has revolutionized video editing by treating transcripts as the primary editing interface, and its speaker detection is remarkably accurate. The platform automatically identifies speakers and allows you to label them with names, making it easy to search for specific people's contributions across multiple projects. Descript's Overdub feature even lets you fix spoken words by typing the correction, with an AI-generated voice matching the original speaker. Plans start at $12 per month for creators, with unlimited transcription included. The main advantage is the integrated workflow where diarization, transcription, and editing happen in one place, eliminating the need to export and import between tools.

Otter.ai specializes in meeting transcription but works excellently for any multi-speaker video content. The platform learns to recognize individual voices over time, automatically labeling speakers by name after you identify them once. Otter's real-time transcription capability makes it valuable for live events or webinars where you need immediate speaker-attributed captions. The free plan includes 600 minutes per month, while paid plans start at $8.33 per month with advanced features like custom vocabulary. The mobile app is particularly well-designed, making it easy to record and transcribe on-location interviews with automatic speaker separation.

Open-Source and Developer Tools

Pyannote.audio is an open-source toolkit built on PyTorch that provides state-of-the-art speaker diarization capabilities. It's completely free and offers the most flexibility for developers who want to customize the diarization pipeline or integrate it into existing applications. The accuracy rivals commercial solutions when properly configured, and it supports unlimited speakers. However, it requires significant technical expertise to implement, including knowledge of Python, machine learning concepts, and audio processing. For teams with development resources, Pyannote.audio offers the best cost-to-performance ratio and complete control over data privacy since everything runs on your own infrastructure.

Whisper by OpenAI is primarily a speech recognition model, but when combined with additional processing, it can perform speaker diarization. The model is open-source and can run locally, making it ideal for privacy-sensitive applications. Whisper's transcription accuracy is exceptional, and several community projects have built diarization layers on top of it. The main challenge is that you'll need to implement or integrate separate diarization logic, as Whisper itself doesn't distinguish speakers out of the box. For technical users comfortable with Python, combining Whisper with Pyannote.audio creates a powerful, cost-free solution.
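The glue logic between a transcription model and a diarization model is mostly timestamp matching. The sketch below assumes you already have two lists: transcript segments with start and end times, and speaker turns with start and end times. The dictionary keys and the `SPEAKER_00` style labels are assumptions for illustration, not the output format of Whisper or Pyannote.audio. Each transcript segment is given the speaker whose turns overlap it the longest.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length in seconds of the intersection of two time ranges."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(transcript_segments, speaker_turns):
    """Attach to each transcript segment the speaker with the most overlap."""
    labeled = []
    for seg in transcript_segments:
        totals = {}
        for turn in speaker_turns:
            shared = overlap(seg["start"], seg["end"], turn["start"], turn["end"])
            if shared > 0:
                totals[turn["speaker"]] = totals.get(turn["speaker"], 0.0) + shared
        # Fall back to a placeholder when no diarization turn covers the segment.
        speaker = max(totals, key=totals.get) if totals else "UNKNOWN"
        labeled.append({**seg, "speaker": speaker})
    return labeled


# Hypothetical inputs standing in for the two models' outputs.
transcript = [
    {"start": 0.0, "end": 4.0, "text": "Welcome to the show."},
    {"start": 4.0, "end": 9.0, "text": "Thanks for having me."},
]
turns = [
    {"start": 0.0, "end": 4.2, "speaker": "SPEAKER_00"},
    {"start": 4.2, "end": 9.0, "speaker": "SPEAKER_01"},
]
for row in assign_speakers(transcript, turns):
    print(row["speaker"], row["text"])
```

Matching on overlap rather than on segment start time tolerates the small timing disagreements you should expect when two separate models process the same audio.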

Specialized and Niche Solutions

Sonix offers fast, accurate transcription with speaker identification across 40+ languages. What makes Sonix particularly useful is its media player that syncs the transcript with your video, allowing you to click any word and jump to that moment. The speaker labels are color-coded in the transcript, making it easy to visually scan for specific speakers. Pricing starts at $10 per hour of transcription, with monthly subscription options available. Sonix also provides translation services, so you can transcribe with diarization in one language and translate to another while maintaining speaker labels.

Trint combines transcription, diarization, and collaborative editing in a platform designed for journalists and media professionals. The speaker identification is accurate, and the interface makes it easy to verify and correct speaker labels. Trint's standout feature is its collaboration tools, allowing multiple team members to highlight, comment, and edit transcripts simultaneously. This is particularly valuable for production teams working on documentary content or investigative pieces where multiple people need to review speaker-attributed content. Pricing starts at $48 per month for individuals, positioning it as a premium solution.

Happy Scribe provides transcription and subtitling services with solid speaker diarization capabilities. The platform supports 120+ languages and offers both automatic and human-made transcription options. For diarization specifically, the automatic service identifies speakers and allows you to rename them in the editor. The interface is intuitive, making it accessible for non-technical users. Pricing is pay-as-you-go at €0.20 per minute or subscription plans starting at €17 per month. Happy Scribe's subtitle editor is particularly well-designed, making it easy to create speaker-attributed captions for social media videos.

Simon Says is designed specifically for video professionals, offering transcription and diarization that integrates directly with Adobe Premiere Pro, Final Cut Pro, and Avid Media Composer. The speaker identification creates separate tracks or markers in your editing timeline, dramatically speeding up the editing process for multi-speaker content. Simon Says supports 100+ languages and provides industry-specific vocabulary for better accuracy in specialized fields. Pricing is credit-based, with one hour of transcription costing approximately $15. For professional editors working in traditional NLE software, the tight integration makes Simon Says worth the premium price.

How to Choose the Right Speaker Diarization Tool

Selecting the best speaker diarization tool requires evaluating your specific needs across several dimensions. Start by assessing your content volume and frequency. If you're processing hours of content daily, a subscription-based service with unlimited usage makes more financial sense than pay-per-minute options. Conversely, if you only need diarization occasionally, pay-as-you-go pricing prevents you from paying for capacity you don't use.
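The subscription versus pay-as-you-go decision comes down to a break-even calculation. The sketch below uses the Happy Scribe figures quoted earlier (€0.20 per minute, or plans from €17 per month) purely as sample inputs, and it assumes the subscription covers all of your monthly usage, which real plans may cap. The function names are illustrative.

```python
def break_even_minutes(monthly_fee, per_minute_rate):
    """Minutes per month at which a flat fee equals pay-as-you-go spending."""
    if per_minute_rate <= 0:
        raise ValueError("per_minute_rate must be positive")
    return monthly_fee / per_minute_rate


def cheaper_option(minutes_per_month, monthly_fee, per_minute_rate):
    """Return which pricing model costs less for a given monthly volume."""
    pay_as_you_go = minutes_per_month * per_minute_rate
    return "subscription" if monthly_fee < pay_as_you_go else "pay-as-you-go"


# Sample inputs taken from the prices quoted in this guide.
print(break_even_minutes(17, 0.20))   # about 85 minutes per month
print(cheaper_option(60, 17, 0.20))   # one hour a month
print(cheaper_option(300, 17, 0.20))  # five hours a month
```

Below the break-even volume, per-minute pricing wins; above it, the flat fee does, provided the plan's usage limits actually cover your volume.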

Consider your technical capabilities and workflow preferences. Non-technical creators benefit most from all-in-one platforms like Descript or OpusClip that handle diarization, editing, and export in a single interface. If you have development resources, API-based solutions like AssemblyAI or open-source tools like Pyannote.audio offer more flexibility and potentially lower long-term costs. Think about where diarization fits in your content pipeline and whether you need real-time processing or if batch processing overnight is acceptable.

Accuracy Requirements and Testing

Accuracy needs vary based on your content type and end use. For legal depositions or medical transcriptions, you need near-perfect accuracy and may want to combine automated diarization with human review. For social media clips or internal meetings, slightly lower accuracy is acceptable if it means faster turnaround and lower costs. Most tools offer free trials or credits, so test them with your actual content rather than relying solely on vendor claims. Pay attention to how well each tool handles your specific challenges, whether that's accents, technical jargon, poor audio quality, or frequent speaker interruptions.

Privacy and Data Security Considerations

Data privacy is increasingly important, especially if you're handling confidential business discussions, unreleased content, or personally identifiable information. Cloud-based services require uploading your audio or video to their servers, which may violate confidentiality agreements or regulatory requirements. If privacy is a concern, prioritize tools that offer on-premises deployment or local processing, such as Pyannote.audio or locally run Whisper implementations. Review each vendor's data retention policies and whether they use your content to train their models. Some services offer GDPR-compliant or HIPAA-compliant options for an additional fee, which may be necessary depending on your industry and location.

Step-by-Step Guide to Implementing Speaker Diarization

Implementing speaker diarization in your workflow is straightforward once you understand the basic process. Here's how to get started regardless of which tool you choose.

Step 1: Prepare Your Audio or Video File. Before uploading to any diarization service, ensure your file meets the technical requirements. Most tools work best with clear audio where speakers don't overlap excessively. If possible, use source files rather than compressed versions, as higher audio quality directly improves diarization accuracy. Check that your file format is supported; most tools accept MP3, WAV, MP4, and MOV, but some have restrictions. If you're working with very long files, consider splitting them into segments of 1-2 hours, as this often improves processing speed and accuracy.
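If you do split a long recording, it helps to plan the cut points before touching the file. This is a minimal sketch that only computes the boundaries; the function name and the one-hour default are assumptions, and the actual cutting is left to whatever audio tool you already use.

```python
def chunk_boundaries(total_seconds, max_chunk_seconds=3600):
    """Return (start, end) pairs in seconds that cover the whole recording."""
    if total_seconds < 0 or max_chunk_seconds <= 0:
        raise ValueError("durations must be non-negative and chunk size positive")
    boundaries = []
    start = 0
    while start < total_seconds:
        end = min(start + max_chunk_seconds, total_seconds)
        boundaries.append((start, end))
        start = end
    return boundaries


# A hypothetical 2.5-hour panel recording split into chunks of at most one hour.
print(chunk_boundaries(9000))
# prints [(0, 3600), (3600, 7200), (7200, 9000)]
```

Keep in mind that each chunk is diarized independently, so "Speaker 1" in one chunk is not guaranteed to be the same person as "Speaker 1" in the next. Plan to reconcile labels across chunks during review.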

Step 2: Upload and Configure Diarization Settings. When uploading your file, specify the number of speakers if the tool asks. Providing an accurate speaker count significantly improves results; if you're unsure, it's better to overestimate slightly. Some tools offer advanced settings like minimum speaker duration or sensitivity thresholds. For most use cases, default settings work well, but if you're dealing with rapid speaker changes or very short interjections, adjusting these parameters can help. Enable any additional features you need, such as custom vocabulary for proper nouns or technical terms that appear frequently in your content.

Step 3: Review and Correct Speaker Labels. Once processing completes, review the diarization results carefully. Most tools achieve 80-95% accuracy, meaning you'll likely need to correct some speaker boundaries or merge incorrectly split segments. Start by labeling the speakers with actual names rather than generic labels; this makes subsequent editing much easier. Look for patterns in errors, such as one speaker consistently being split into two labels, which you can fix by merging those speaker IDs. This review process typically takes 10-20% of the original content length (roughly 6 to 12 minutes per hour of footage), which is still far faster than manual speaker tracking.
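If your tool exports segments as data, the "one speaker split into two labels" fix can be scripted. The sketch below assumes segments are dictionaries with `start`, `end`, and `speaker` keys, which is an illustrative format rather than any specific tool's export. It remaps labels and then joins back-to-back segments from the same speaker.

```python
def relabel_and_merge(segments, mapping, max_gap=0.5):
    """Apply a label mapping, then merge adjacent segments from one speaker.

    mapping  - dict of old label -> new label (unlisted labels are kept)
    max_gap  - largest pause in seconds that still counts as the same turn
    """
    merged = []
    for seg in sorted(segments, key=lambda s: s["start"]):
        speaker = mapping.get(seg["speaker"], seg["speaker"])
        previous = merged[-1] if merged else None
        if (
            previous
            and previous["speaker"] == speaker
            and seg["start"] - previous["end"] <= max_gap
        ):
            previous["end"] = max(previous["end"], seg["end"])
        else:
            merged.append({"start": seg["start"], "end": seg["end"], "speaker": speaker})
    return merged


# Hypothetical output where the host was wrongly split into Speaker 1 and Speaker 3.
raw = [
    {"start": 0.0, "end": 5.0, "speaker": "Speaker 1"},
    {"start": 5.2, "end": 8.0, "speaker": "Speaker 3"},
    {"start": 8.5, "end": 12.0, "speaker": "Speaker 2"},
]
names = {"Speaker 1": "Host", "Speaker 3": "Host", "Speaker 2": "Guest"}
for seg in relabel_and_merge(raw, names):
    print(seg)
```

The same mapping handles both jobs from this step: collapsing duplicate IDs and swapping generic labels for real names.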

Step 4: Export and Integrate with Your Workflow. After finalizing speaker labels, export the results in a format that works with your editing tools. Most diarization platforms offer multiple export options including plain text transcripts with speaker labels, SRT or VTT caption files with speaker names, or JSON files with detailed timestamp data. If you're using the diarization for video editing, look for tools that export directly to your NLE as markers or separate tracks. For content repurposing workflows, having speaker-attributed transcripts makes it easy to extract quotes, create show notes, or generate social media content that properly credits each speaker.
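When a tool only gives you raw timestamp data, producing a speaker-attributed SRT file yourself is simple. Here is a minimal sketch, assuming each segment is a dictionary with `start`, `end`, `speaker`, and `text` keys (an illustrative format, not a particular platform's JSON schema).

```python
def srt_timestamp(seconds):
    """Format seconds as the HH:MM:SS,mmm timestamp that SRT files use."""
    millis = int(round(seconds * 1000))
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments):
    """Build SRT text with the speaker name prefixed to each caption."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n"
            f"{srt_timestamp(seg['start'])} --> {srt_timestamp(seg['end'])}\n"
            f"{seg['speaker']}: {seg['text']}\n"
        )
    return "\n".join(blocks)


# Hypothetical labeled segments.
captions = [
    {"start": 0.0, "end": 4.0, "speaker": "Host", "text": "Welcome to the show."},
    {"start": 4.0, "end": 9.5, "speaker": "Guest", "text": "Thanks for having me."},
]
print(to_srt(captions))
```

Prefixing the name to the caption text is the most portable way to show speaker changes, since plain SRT has no dedicated speaker field.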

Step 5: Build a Speaker Library for Recurring Guests. If you regularly work with the same speakers, invest time in creating speaker profiles or voice prints. Tools like Otter.ai and Descript learn to recognize voices over time, automatically applying correct names without manual labeling. This upfront investment pays dividends as your content library grows. For podcast series or interview shows, maintaining a speaker database means new episodes require minimal manual correction. Some enterprise tools even allow you to share speaker libraries across team members, ensuring consistency in how speakers are identified across different projects and editors.
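Under the hood, a speaker library is typically a set of stored voice embeddings that new segments are compared against. The sketch below shows the general idea with cosine similarity. The three-number vectors, the 0.75 threshold, and the function names are all illustrative assumptions; real embeddings have hundreds of dimensions, and this is not how Otter.ai or Descript are documented to work internally.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(embedding, library, threshold=0.75):
    """Return the best-matching name, or None if nobody is similar enough."""
    best_name, best_score = None, threshold
    for name, reference in library.items():
        score = cosine_similarity(embedding, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Toy voice prints for two recurring guests (hypothetical values).
library = {
    "Jane Doe": [0.9, 0.1, 0.2],
    "John Smith": [0.1, 0.8, 0.3],
}
print(identify([0.85, 0.15, 0.25], library))  # prints Jane Doe
print(identify([0.0, 0.1, 0.9], library))     # prints None
```

The threshold is the important design choice: set it too low and strangers get labeled as known guests, too high and recurring guests stay anonymous.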

Common Speaker Diarization Challenges and Solutions

Even the best speaker diarization tools struggle with certain scenarios, and understanding these limitations helps you work around them. One of the most common challenges is overlapping speech, where multiple people talk simultaneously. Most diarization systems assign overlapping segments to whichever speaker is louder or started first, which can create confusing transcripts. The best solution is to improve your recording technique by using separate microphones for each speaker when possible, or by establishing clearer turn-taking protocols during recording.
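Some tools do report overlapping turns rather than collapsing them to one speaker. When yours does, you can flag those regions for manual review. This is a minimal sketch that assumes segments are dictionaries with `start`, `end`, and `speaker` keys, which is an illustrative format.

```python
def find_overlaps(segments):
    """Return (start, end, speaker_a, speaker_b) for each cross-speaker overlap."""
    ordered = sorted(segments, key=lambda s: s["start"])
    overlaps = []
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            # Segments are sorted by start, so nothing later can overlap either.
            if second["start"] >= first["end"]:
                break
            if second["speaker"] != first["speaker"]:
                overlaps.append(
                    (
                        second["start"],
                        min(first["end"], second["end"]),
                        first["speaker"],
                        second["speaker"],
                    )
                )
    return overlaps


# Hypothetical turns where B interrupts A, then A cuts back in.
turns = [
    {"start": 0.0, "end": 5.0, "speaker": "A"},
    {"start": 4.0, "end": 7.0, "speaker": "B"},
    {"start": 6.5, "end": 9.0, "speaker": "A"},
]
print(find_overlaps(turns))
# prints [(4.0, 5.0, 'A', 'B'), (6.5, 7.0, 'B', 'A')]
```

Reviewing only the flagged regions is usually faster than scrubbing through the whole recording looking for crosstalk.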

Similar-sounding voices pose another significant challenge, particularly when speakers share the same gender, age range, and accent. Diarization algorithms rely on acoustic differences to distinguish speakers, so when those differences are minimal, accuracy drops. You can improve results by ensuring each speaker uses a different microphone or recording position, which creates subtle acoustic signatures beyond just voice characteristics. During the review phase, pay extra attention to segments where similar-sounding speakers interact, as these are most likely to contain errors.

Handling Poor Audio Quality

Background noise, echo, and low-quality recording equipment all degrade diarization accuracy. While you can't fully restore a poor recording after the fact, you can take steps to minimize its impact. Run audio cleanup such as noise reduction or echo cancellation before diarization, as cleaner audio produces better results. Some diarization platforms include preprocessing options that automatically enhance audio quality. For future recordings, invest in better microphones and recording environments, as the time saved in post-production quickly justifies the equipment cost. When working with unavoidably poor audio, consider using multiple diarization tools and comparing results, as different algorithms handle noise differently.

Managing Large Numbers of Speakers

Panel discussions, conferences, and roundtable conversations with many speakers challenge even advanced diarization systems. Most tools have practical limits of 10-20 speakers before accuracy degrades significantly. For events with more speakers, consider breaking the content into smaller segments focused on specific conversations or sessions. Another approach is to use a two-pass system where you first identify major speakers who talk frequently, then manually handle brief contributions from occasional speakers. When creating clips from multi-speaker content, tools like OpusClip help by focusing on the most engaging segments, which typically involve fewer simultaneous speakers and are easier to diarize accurately.
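The first pass of that two-pass approach can be automated by ranking speakers by talk time. The sketch below assumes segments are dictionaries with `start`, `end`, and `speaker` keys, and the 5% cutoff is an arbitrary starting point, not a recommended standard.

```python
from collections import defaultdict


def split_major_minor(segments, min_share=0.05):
    """Separate speakers into major and occasional by share of total talk time."""
    talk_time = defaultdict(float)
    for seg in segments:
        talk_time[seg["speaker"]] += seg["end"] - seg["start"]
    total = sum(talk_time.values())
    major = {
        speaker
        for speaker, seconds in talk_time.items()
        if total and seconds / total >= min_share
    }
    minor = set(talk_time) - major
    return major, minor


# Hypothetical panel: two main speakers and one brief audience question.
panel = [
    {"start": 0.0, "end": 60.0, "speaker": "Speaker 1"},
    {"start": 60.0, "end": 110.0, "speaker": "Speaker 2"},
    {"start": 110.0, "end": 113.0, "speaker": "Speaker 3"},
]
major, minor = split_major_minor(panel)
print(sorted(major), sorted(minor))
# prints ['Speaker 1', 'Speaker 2'] ['Speaker 3']
```

You can then spend your labeling effort on the major speakers and handle the occasional ones by hand, or group them under a single label such as "Audience."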

Frequently Asked Questions

How accurate is automated speaker diarization compared to manual labeling? Modern speaker diarization tools achieve 80-95% accuracy in optimal conditions with clear audio and distinct voices. This means you'll still need to review and correct results, but the process takes about 10-20% of the time required for completely manual speaker tracking. Accuracy drops with poor audio quality, similar-sounding voices, or frequent speaker overlaps. For critical applications like legal transcripts, combining automated diarization with human review provides the best balance of speed and accuracy.

Can speaker diarization tools identify speakers by name automatically? Most diarization tools initially label speakers generically as Speaker 1, Speaker 2, etc., requiring you to manually assign names. However, advanced tools like Otter.ai and Descript can learn to recognize specific voices over time, automatically applying names to recurring speakers. This speaker recognition capability requires building a voice profile for each person, typically by labeling them in 2-3 recordings. Enterprise solutions may offer voice enrollment features where speakers record a brief sample specifically for identification purposes.

What's the difference between speaker diarization and speech-to-text transcription? Speech-to-text transcription converts spoken words into written text, while speaker diarization identifies who spoke when. They're complementary technologies that work together to create speaker-attributed transcripts. Most modern transcription services include basic diarization, but the quality varies significantly. When evaluating tools, look at both transcription accuracy (word error rate) and diarization accuracy (diarization error rate) separately, as a tool might excel at one while being mediocre at the other.

How many speakers can diarization tools handle effectively? Most commercial diarization tools work best with 2-10 speakers, with accuracy declining as speaker count increases. Some advanced platforms like AssemblyAI claim to handle unlimited speakers, but practical accuracy remains highest with fewer than 20 distinct voices. For large events with many speakers, consider whether you need to identify every brief comment or can focus on primary speakers who contribute substantially. Breaking long recordings into smaller segments with fewer active speakers also improves results.

Do speaker diarization tools work with video calls and webinars? Yes, most diarization tools work well with recorded video calls from platforms like Zoom, Teams, or Google Meet. However, accuracy can be affected by compression artifacts and varying audio quality across participants. For best results, record locally rather than relying on platform recordings, use separate audio tracks for each participant if available, and ensure all participants use good microphones. Some tools like Otter.ai offer direct integrations with video conferencing platforms for real-time diarization during calls.

Can I use speaker diarization for content in multiple languages? Language support varies significantly across diarization tools. While most handle English well, accuracy for other languages depends on whether the tool uses language-specific acoustic models. Sonix supports 40+ languages and Happy Scribe supports 120+, but broad coverage on paper does not guarantee equal accuracy in every language. If you create multilingual content or work with speakers who code-switch between languages, test your specific language combinations during trial periods, as performance can vary substantially. Some tools handle the diarization well but struggle with transcription accuracy in less common languages.

How does speaker diarization handle background music or noise? Background music and noise significantly challenge diarization systems, as they can be misidentified as additional speakers or mask the acoustic features used to distinguish voices. Most tools include preprocessing to filter out non-speech audio, but heavy background noise reduces accuracy. For content with music beds or ambient sound, consider using audio editing software to create a clean voice-only track for diarization, then apply the speaker labels back to your original mixed audio. This extra step substantially improves results when working with challenging audio environments.

Start Creating Better Multi-Speaker Content Today

Speaker diarization has transformed from a specialized technology into an essential tool for anyone working with multi-speaker video content. Whether you're producing podcasts, conducting interviews, recording webinars, or capturing panel discussions, the right diarization tool eliminates hours of tedious manual work while making your content more accessible and searchable. The twelve tools I've covered offer solutions for every budget and technical skill level, from enterprise APIs to creator-friendly platforms.

The key is to start experimenting with these tools using your actual content. Take advantage of free trials to test accuracy with your specific speakers, audio quality, and content format. Pay attention not just to the diarization accuracy but to how well each tool integrates with your existing workflow. The best tool is the one you'll actually use consistently, not necessarily the one with the most features or highest accuracy in lab conditions.

If you're looking to take your multi-speaker content further, consider how speaker diarization fits into a broader repurposing strategy. Tools like OpusClip combine diarization with AI-powered clip creation, automatically identifying the most engaging moments from each speaker and creating shareable clips with accurate, speaker-attributed captions. This integrated approach means you're not just transcribing your content but actively transforming it into assets that drive engagement across social platforms. Try OpusClip today and see how speaker diarization can become the foundation of a more efficient, effective content workflow that turns every conversation into multiple high-performing pieces of content.